We have seen what can be learned by the perceptron algorithm — namely, linear decision boundaries for binary classification problems.

It may also be of interest that the perceptron algorithm can be used for regression with one simple modification: do not apply an activation function (i.e. the sigmoid) to the output. I invite the interested reader to open another tab.
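For readers who would rather not open another tab, here is a minimal sketch of the regression variant. It assumes a squared-error update (often called the delta rule), which the text above does not specify. The function name `train_linear_unit`, the learning rate, and the toy data are all made up for illustration.

```python
import numpy as np

def train_linear_unit(X, y, epochs=500, lr=0.1):
    """Perceptron-style unit with no activation: the output is the raw w . x + b."""
    # Append a column of ones so the bias is learned as the last weight.
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        for xi, yi in zip(Xb, y):
            pred = xi @ w                 # no activation applied here
            w += lr * (yi - pred) * xi    # assumed squared-error (delta rule) update
    return w

# Hypothetical noise-free toy data drawn from y = 2x + 1.
X = np.linspace(0.0, 1.0, 20).reshape(-1, 1)
y = 2.0 * X[:, 0] + 1.0

w = train_linear_unit(X, y)
print(w)  # slope and bias, expected to be close to 2 and 1
```

The only change from the classification setting is that the prediction is left as a real number, so the error term `yi - pred` is real-valued rather than a class mismatch.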

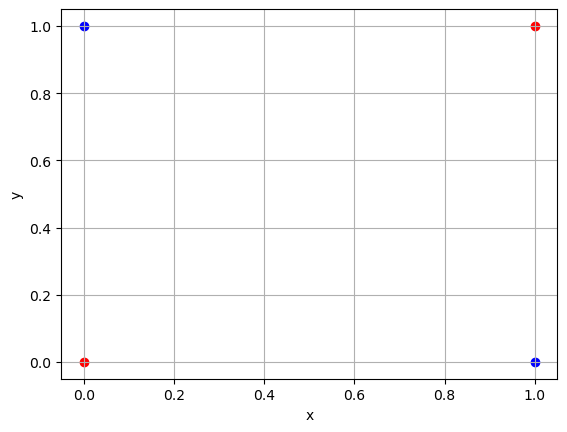

We begin with the punchline:

XOR

Not linearly separable in \(\mathbb{R}^2\)
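To see the punchline in action, the sketch below trains a perceptron on AND and on XOR. It assumes the classic mistake-driven update with a hard threshold rather than any particular formulation from earlier sections. The helper name `train_perceptron` and the epoch budget are illustrative choices.

```python
import numpy as np

def train_perceptron(X, y, epochs=100, lr=1.0):
    """Mistake-driven perceptron rule; labels are 0 or 1.

    Returns the weights and whether a full pass with zero mistakes occurred.
    """
    # Append a column of ones so the bias is learned as the last weight.
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(Xb, y):
            pred = 1 if xi @ w > 0 else 0   # hard threshold (assumed here)
            if pred != yi:
                w += lr * (yi - pred) * xi
                mistakes += 1
        if mistakes == 0:
            return w, True
    return w, False

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])
y_xor = np.array([0, 1, 1, 0])

_, and_converged = train_perceptron(X, y_and)
_, xor_converged = train_perceptron(X, y_xor)
print("AND converged:", and_converged)
print("XOR converged:", xor_converged)
```

AND is linearly separable, so the loop reaches a mistake-free pass. For XOR, no epoch budget helps: a mistake-free pass would require \(b \le 0\), \(w_1 + b > 0\), \(w_2 + b > 0\), and \(w_1 + w_2 + b \le 0\), and adding the two strict inequalities gives \(w_1 + w_2 + b > -b \ge 0\), which contradicts the last condition.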